Researchers at Carnegie Mellon University and Texas Instruments have seen the future, or at least one major component of it: gaze detection.

The murky-sounding term is actually quite simple: Most humans with functioning eyesight know where to meet the gaze of someone they are trying to interact with, whether friend or foe. When a person looks at a conversation partner and the partner looks back, as typically happens, their eyelines cross at some point in the space between them.

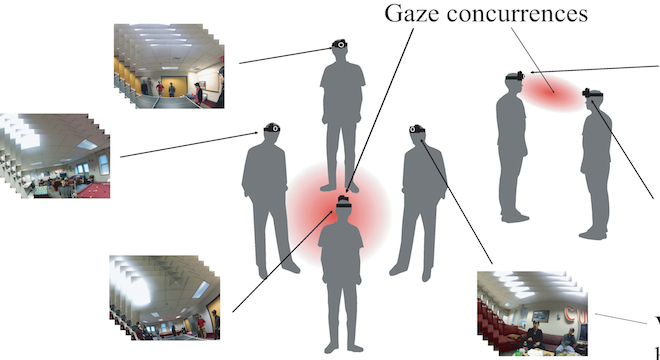

The area where the gazes, or eyelines, of two or more people overlap in free space is called the "gaze concurrence."
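The article doesn't describe how CMU's algorithm works, so the sketch below illustrates only the basic geometry of a gaze concurrence. It assumes each person's gaze is a ray starting at a known eye position and pointing in a known direction, and it finds the spot where two such rays pass closest to each other (real eyelines rarely intersect exactly). This is a textbook closest-point-between-two-lines calculation, not the researchers' "3D social saliency estimation" method; the function name `gaze_concurrence` and its inputs are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def _along(p: Vec3, d: Vec3, k: float) -> Vec3:
    """Point reached by moving k times direction d away from origin p."""
    return (p[0] + k * d[0], p[1] + k * d[1], p[2] + k * d[2])


def gaze_concurrence(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3,
                     eps: float = 1e-9) -> Optional[Tuple[Vec3, float]]:
    """Hypothetical helper: where do two gaze rays come closest?

    p1, p2 are assumed eye positions; d1, d2 are assumed gaze directions.
    Returns (midpoint, gap), where gap is the shortest distance between the
    two eyelines, or None if the gazes are parallel or would only meet
    behind one of the viewers.
    """
    w0 = (p1[0] - p2[0], p1[1] - p2[1], p1[2] - p2[2])
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:  # parallel eyelines never converge
        return None
    s = (b * e - c * d) / denom  # ray parameter on the first gaze
    t = (a * e - b * d) / denom  # ray parameter on the second gaze
    if s < 0 or t < 0:  # closest approach lies behind a viewer
        return None
    q1, q2 = _along(p1, d1, s), _along(p2, d2, t)
    midpoint = tuple((x + y) / 2 for x, y in zip(q1, q2))
    return midpoint, math.dist(q1, q2)


# Two people two meters apart, one standing slightly higher, looking toward each other's line of sight.
print(gaze_concurrence((0, 0, 0), (1, 1, 0), (2, 0, 1), (-1, 1, 0)))
# ((1.0, 1.0, 0.5), 1.0)
```

Taking the midpoint of the shortest connecting segment is one simple choice; with more than two people, a sketch like this could be extended by averaging pairwise midpoints, though a real system would also need to handle noisy gaze estimates.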

Researchers at Carnegie Mellon University’s Robotics Institute and Texas Instruments in mid-December announced that they had pioneered a new computer algorithm that detects with astonishing accuracy where two or more persons’ gazes meet in 3D free space.

The work, which was funded by Samsung, the South Korean global electronics giant, involved putting head-mounted video cameras on groups of people and using a custom computer program to spot where the wearers were looking at any given time.

Carnegie Mellon's team, which included three researchers, one of them from Texas Instruments, tested its system in three scenarios, as a news release from the institute explains: "a meeting involving two work groups; a musical performance; and a party in which participants played pool and ping-pong and chatted in small groups." In all three, the system was "quite accurate," Yaser Sheikh, an assistant research professor at the CMU Robotics Institute, told TPM via email.

“It can get to within a few centimeters of the correct gaze concurrence,” Sheikh said. “We call the algorithm ‘3D social saliency estimation’ as it finds what areas are important to a particular social group of people.”

Here’s a video CMU released of one of the tests, the “party” scenario:

As for specific real-world uses for the still-in-development system, Sheikh told TPM that "there are many interesting applications that we're currently pursuing." The two main ones he and his colleagues are investigating are helping diagnose autism in children and producing a "final cut" video from footage captured by multiple head-mounted cameras, an effort Sheikh said was called the "Alfred Hitchcock" project.

Other potential uses abound, including military, law enforcement and security surveillance, where the system could help soldiers and security personnel better coordinate their activities. As Sheikh explained:

"Many soldiers have cameras attached to their helmets. These cameras are largely used as documentation devices for future reference and review. The great appeal here is that our technology could be used for realtime coordination and planning for teams like soldiers or even players on a team."

CMU’s team owns the intellectual property rights to the system and is currently focused on research more than commercialization, but “we’re always interested in getting our technology out,” Sheikh stated.

Still, Sheikh noted that now that the hard work of developing the basic algorithm for gaze detection was done, the next frontier was figuring out what to use it for and how to use it.

"This is largely an unexplored space," Sheikh told TPM. "There has been some attention to this idea from sociologists and psychologists, but reliable tools to measure gaze intersections in 3D really have not existed prior to this."

CMU's team fully expects that wearable cameras backed by tiny computers, such as Google's upcoming computerized glasses (Project Glass), will become one of the most widely adopted consumer devices in the near term.

“Yes, Google Glass is an example of the next great change in how we interact with technology: wearable sensors, displays, and computation,” Sheikh said. “Just as nearly everyone has a cellphone with a camera today, we expect that shortly wearable devices will also be as ubiquitous.”