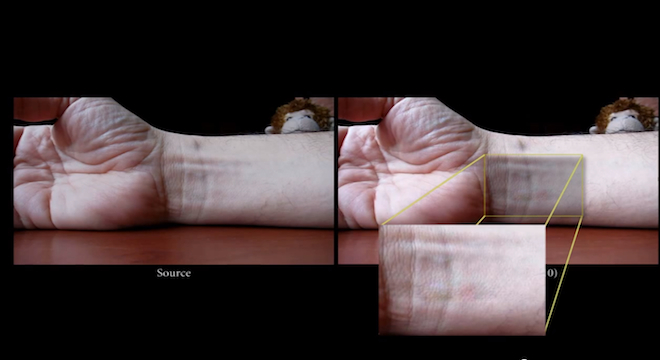

Seeing a person’s pulse through their skin seems like a power reserved for superhero comics. But researchers at MIT have developed new video processing software that does just that and more, allowing an observer to identify tiny, otherwise imperceptible changes, such as a person’s breathing, blood flow and even the slight vibration of seemingly still guitar strings, from just a few frames of video footage taken seconds apart.

The images produced by the technique, called Eulerian Video Magnification, are eerily similar to the animated GIF images popular on the Web today.

But the technology could also be used in far more practical, potentially even life-saving applications, according to the MIT researchers who devised it.

“This can be implemented on a tablet or smart phone to give a video magnification window on the world,” wrote MIT professors William Freeman, Fredo Durand and John Guttag in a joint statement to TPM. “Potentially, physicians could identify symptoms of disease more easily.”

Indeed, the researchers plan to release the source code soon, allowing others to use it.

The system works by selectively amplifying subtle color variations in the video's pixels from one frame to the next. It can also be applied to images from a still camera if they are taken in quick succession, in “burst” mode.
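The idea of amplifying small color changes can be illustrated with a short sketch. This is not the MIT researchers' code: the function name `magnify_color`, its parameters, and the choice of a simple FFT band-pass filter are all assumptions made for illustration, and the sketch skips any spatial processing the real system may perform. It isolates color changes at each pixel that fall within a chosen frequency band (for example, around a resting heart rate of roughly 1 Hz), multiplies them by a gain, and adds them back to the original frames.

```python
import numpy as np

def magnify_color(frames, fps, low_hz, high_hz, alpha):
    """Amplify per-pixel color changes within a temporal frequency band.

    Hypothetical helper for illustration only.
    frames : array of shape (T, H, W, C), float values (e.g. 0.0 to 1.0)
    fps    : frame rate of the footage, in frames per second
    low_hz, high_hz : band of temporal frequencies to amplify
    alpha  : gain applied to the band-passed signal
    """
    frames = np.asarray(frames, dtype=np.float64)
    n_frames = frames.shape[0]

    # Fourier transform along the time axis, independently for every pixel.
    spectrum = np.fft.rfft(frames, axis=0)
    freqs = np.fft.rfftfreq(n_frames, d=1.0 / fps)

    # Zero out everything outside the band of interest (ideal band-pass).
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[~keep] = 0

    # Back to the time domain: only the subtle variation in the band remains.
    band = np.fft.irfft(spectrum, n=n_frames, axis=0)

    # Add the amplified variation back onto the original footage.
    return frames + alpha * band
```

With footage sampled at 30 frames per second, a call such as `magnify_color(frames, fps=30, low_hz=0.8, high_hz=1.2, alpha=50)` would exaggerate color changes that repeat about once per second while leaving the rest of the picture as it was. The result may need clipping back into the valid pixel range before display.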

“The technique works even on a conventional, inexpensive web cam,” the scientists told TPM.

The first prototype was developed in six months, in time for submission to SIGGRAPH, an annual computer graphics conference held in Los Angeles in August.

“After getting it to work for visualizing the human pulse, we then realized we could also amplify motion signals using a very similar technique,” the researchers explained. “We noticed motion amplifications in our amplified human pulse signal, so we went back to understand that, and then figured out how to control and exploit it.”

Freeman, Durand and Guttag are all members of MIT’s Computer Science and Artificial Intelligence Lab (CSAIL), the same division of the university responsible for recent advances in a “learning” surveillance system, although the two projects are distinct and are being worked on by different researchers.

Like that project, the Eulerian Video Magnification system is funded by the U.S. Defense Department, specifically the Defense Advanced Research Projects Agency (DARPA), the far-flung Pentagon unit that developed the prototype of the Internet back in the late 1960s. MIT also told TPM that graphics company Nvidia and Taiwanese laptop manufacturing giant Quanta Computer are involved.

The researchers told TPM they did not see any potential for misuse of their system.

“If any technology is useful, one might be able to construct a scenario where it was misused, but it’s hard to think of one for this technology,” they wrote.

(H/T: Buzzfeed FWD)